Lattice

A Slack bot that transforms conversations into Jira tickets and GitHub PRs using MCP. Lattice uses an LLM to parse thread context and automatically generate well-structured tickets or draft pull requests, keeping Slack as the single interface for developer workflows.

I landed in Toronto on Friday morning after a work trip to Bellevue. By Friday afternoon, I was in the car with Evan on the way to Waterloo, running on about 3 hours of sleep. In hindsight, that was not the smartest way to start a hackathon.

The idea for Lattice came from a problem we kept running into as interns. Slack is where all the good conversations happen (bugs get discussed, features get brainstormed, decisions get made), but then someone has to manually write up the issue or feature request in a ticketing system. Context gets lost, things slip through the cracks, and it's just tedious. We wanted to build something that could turn those Slack conversations directly into action.

What Lattice Does

Lattice is a Slack bot that sits on top of an MCP (Model Context Protocol) architecture. When you mention the bot in a thread, it reads the conversation context and figures out what you're trying to do. It'll create a Jira ticket with a proper summary and description pulled from the discussion. It'll then draft a PR with the right branch name, title, and body. The whole point is that you never have to leave Slack—Lattice handles the boring stuff.

Technical Deep Dive

We built Lattice as a Slack bot using Bolt (Slack's SDK) with Socket Mode. One of the trickiest parts was parsing messy Slack conversations into structured data—people don't write bug reports, they vent, add emojis, go on tangents, and leave half-finished thoughts. We used Cohere to extract structure from this chaos:

```python
# services/cohere_service.py
def parse_bug_report(self, conversation):
    formatted_conv = "\n".join([
        f"{msg['user']}: {msg['text']}"
        for msg in conversation
    ])
    prompt = """Extract and structure information into a clear bug report.
    Extract: title, description, steps_to_reproduce, expected_behavior,
    actual_behavior, severity, affected_components, additional_context"""
```
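The excerpt stops before the model's reply is consumed, so here is a minimal sketch of what that step could look like, not Lattice's actual code. It assumes the model is asked to reply with a JSON object; `BugReport` and `bug_report_from_reply` are hypothetical names, and the field types and defaults are guesses based on the field list in the prompt.

```python
import json
from dataclasses import dataclass, field, fields


@dataclass
class BugReport:
    # Field names mirror the list in the prompt; types and defaults are assumptions.
    title: str = ""
    description: str = ""
    steps_to_reproduce: list = field(default_factory=list)
    expected_behavior: str = ""
    actual_behavior: str = ""
    severity: str = "unknown"
    affected_components: list = field(default_factory=list)
    additional_context: str = ""


def bug_report_from_reply(reply_text: str) -> BugReport:
    """Build a BugReport from a model reply that is expected to be a JSON object.

    Unknown keys are dropped and missing keys fall back to the defaults,
    so a partially filled reply still yields a usable report.
    """
    try:
        data = json.loads(reply_text)
    except json.JSONDecodeError:
        data = None
    if not isinstance(data, dict):
        # Hypothetical fallback: keep the raw text so a human can triage it.
        return BugReport(title="Unparsed report", additional_context=reply_text)
    known = {f.name for f in fields(BugReport)}
    return BugReport(**{k: v for k, v in data.items() if k in known})


reply = '{"title": "Login button unresponsive", "severity": "high", "mood": "annoyed"}'
report = bug_report_from_reply(reply)
print(report.title, report.severity)
```

Dropping unknown keys and defaulting missing ones is a deliberate choice here: chat threads are messy, and a half-filled report is easier to fix by hand than a crash.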

The core of Lattice is a two-pass approach for generating code fixes. Instead of blindly generating patches, we first locate the exact change target and attach a confidence score. If that score is below 0.6, Lattice flags the bug for manual review rather than generating a bad PR:

```python
# mcp_server.py
# Pass A: Locate exact change target with confidence
location = self._locate_with_deimos(bug_report, code_context)

if location.get('confidence', 0) >= 0.6:
    # Pass B: Generate minimal unified diff
    fix = self._generate_patch_with_deimos(bug_report, target_file['content'], location)
else:
    fix = {"root_cause": "Manual analysis required"}
```
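To see the gating logic on its own, here is a small self-contained sketch of the same pattern. The two model calls are replaced with hypothetical stand-ins (`fake_locate` and `fake_generate_patch`), and `propose_fix` is an illustrative name rather than a function from our codebase; only the 0.6 cutoff and the fallback payload come from the excerpt above.

```python
from typing import Callable

CONFIDENCE_THRESHOLD = 0.6  # Same cutoff as in the excerpt above.


def propose_fix(
    bug_report: dict,
    file_content: str,
    locate: Callable[[dict, str], dict],
    generate_patch: Callable[[dict, str, dict], dict],
) -> dict:
    """Run pass A (locate), then pass B (patch) only if pass A is confident."""
    location = locate(bug_report, file_content)
    if location.get("confidence", 0) >= CONFIDENCE_THRESHOLD:
        return generate_patch(bug_report, file_content, location)
    # Low confidence: skip patch generation and hand off to a human.
    return {"root_cause": "Manual analysis required"}


# Hypothetical stand-ins for the two model calls.
def fake_locate(bug_report, file_content):
    needle = bug_report["symbol"]
    for line_no, line in enumerate(file_content.splitlines(), start=1):
        if needle in line:
            return {"line": line_no, "confidence": 0.9}
    return {"line": None, "confidence": 0.2}


def fake_generate_patch(bug_report, file_content, location):
    return {"root_cause": bug_report["summary"], "line": location["line"]}


source = "def total(items):\n    return sum(items) + 1\n"
print(propose_fix({"symbol": "sum(", "summary": "off-by-one"}, source, fake_locate, fake_generate_patch))
print(propose_fix({"symbol": "missing_fn", "summary": "?"}, source, fake_locate, fake_generate_patch))
```

Passing the two passes in as callables keeps the threshold check testable without any model or network access.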

We used Martian's Deimos Router (Martian was a sponsor at Hack the North, HTN for short) to handle model routing. Different tasks need different models: you don't need GPT-4o for simple text formatting, but you definitely want it for code analysis. Routing this way saved us money during testing and made responses faster for simpler tasks:

```python
# services/deimos_router.py
task_rule = TaskRule(
    name="lattice_task_router",
    triggers={
        "locate_change_target": "openai/gpt-4o",      # Code analysis
        "generate_patch": "openai/gpt-4o",            # Code generation
        "parse_bug_report": "cohere/command-r-plus",  # NLP parsing
        "pr_description": "openai/gpt-4o-mini",       # Simple task → cheaper model
    }
)
```
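Stripped of the router library, the idea is just a lookup from task name to model. The sketch below is a plain-Python stand-in and does not use the Deimos API. The table is copied from the rule above, while `model_for`, `DEFAULT_MODEL`, and the fallback behavior are assumptions added for illustration.

```python
# Task-to-model table copied from the rule above.
TASK_MODELS = {
    "locate_change_target": "openai/gpt-4o",
    "generate_patch": "openai/gpt-4o",
    "parse_bug_report": "cohere/command-r-plus",
    "pr_description": "openai/gpt-4o-mini",
}

# Assumption: unrecognized tasks fall back to the cheaper model.
DEFAULT_MODEL = "openai/gpt-4o-mini"


def model_for(task: str) -> str:
    """Return the model identifier to use for a named task."""
    return TASK_MODELS.get(task, DEFAULT_MODEL)


for task in ("generate_patch", "pr_description", "summarize_thread"):
    print(f"{task} -> {model_for(task)}")
```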

The Struggles

Honestly, this project was rough. MCP was brand new to all of us, and the Martian documentation was hard to follow. We spent hours debugging cryptic errors that turned out to be environment mismatches between our setups. Integrating Slack, the LLM, and the MCP server meant a lot of moving pieces that could (and did) break independently.

And then there was the sleep deprivation. Between Friday and Sunday, I got maybe 7 hours of sleep total. By Sunday morning, my brain was running on autopilot.

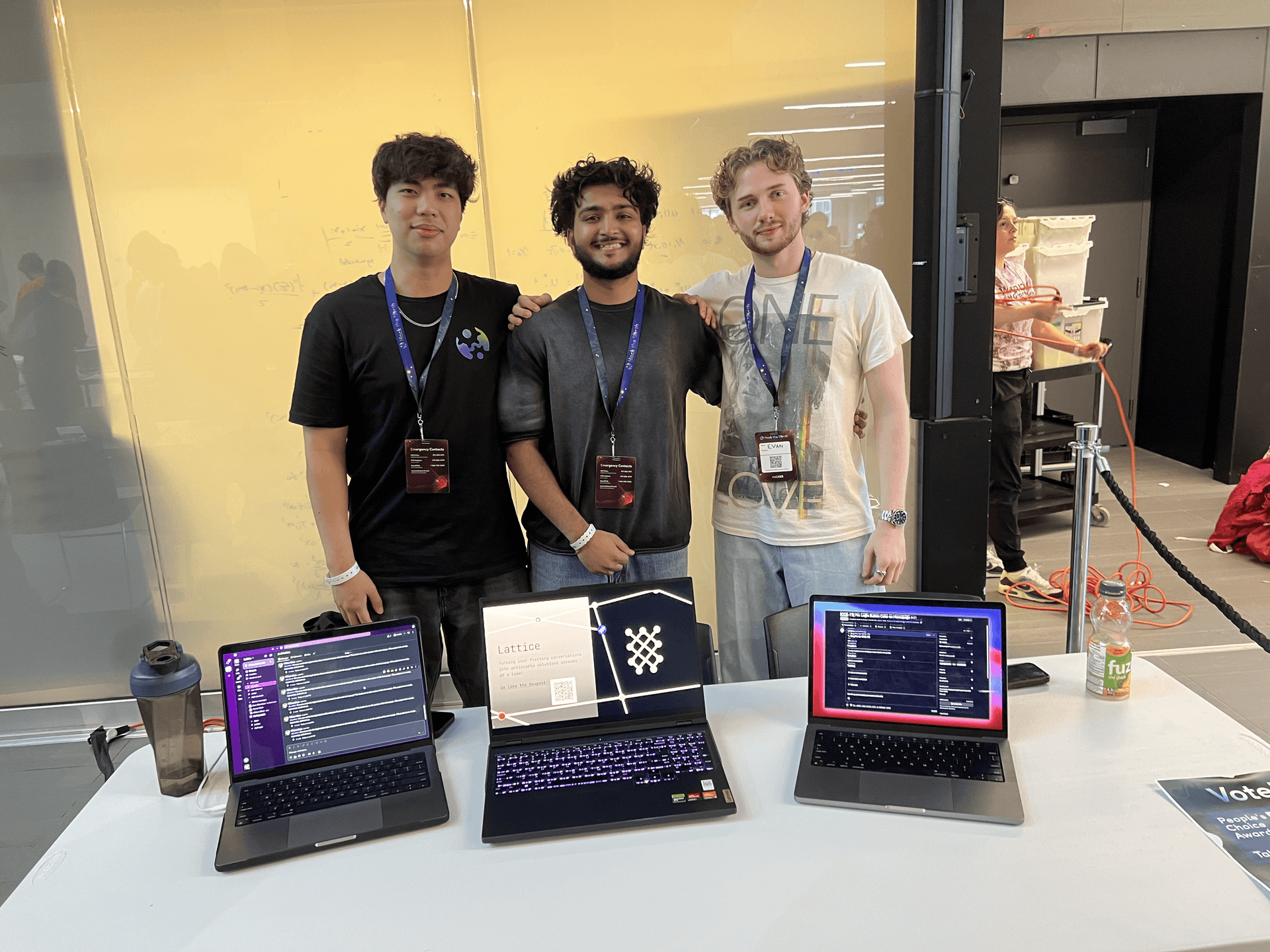

Making Finals

Despite everything, we made it to the final round—top 10% out of 261 teams. Walking up to present was surreal, especially given the state we were in.

One of our judges was Marcelo Cortes, co-founder of Faire. His feedback was both validating and humbling—he mentioned that Faire actually has something similar in their internal tools. On one hand, it meant we were solving a real problem. On the other hand, well... we didn't win anything.

But honestly? I'm proud of what we built. None of us had used the Cohere API before. None of us had built a Slack bot. Getting Deimos Router to work was its own mini-victory. For a weekend project on almost no sleep, Lattice came together better than I expected.

What I Took Away

MCP's architecture is genuinely powerful for making tools modular and reusable (it helped me with what I was doing at my internship at the time!). The key is designing clear schemas so the LLM can reliably call the right tools. The two-pass approach with confidence thresholds taught me something important: sometimes the best feature is knowing when not to do something.
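To make the schema point concrete, here is a minimal sketch, not Lattice's actual tool definition. MCP describes a tool with a `name`, a `description`, and a JSON Schema under `inputSchema`; the tool name `create_jira_ticket`, its properties, and the `validate_arguments` helper are all hypothetical. The helper checks only required keys, basic types, and enums, so treat it as an illustration rather than a full JSON Schema validator.

```python
# Hypothetical tool definition in the shape MCP uses: name, description, inputSchema.
CREATE_TICKET_TOOL = {
    "name": "create_jira_ticket",
    "description": "Create a Jira ticket from a summarized Slack thread.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "summary": {"type": "string"},
            "description": {"type": "string"},
            "severity": {"type": "string", "enum": ["low", "medium", "high"]},
        },
        "required": ["summary", "description"],
    },
}

# Only the JSON Schema types this sketch needs.
_PY_TYPES = {"string": str, "number": (int, float), "boolean": bool}


def validate_arguments(tool: dict, arguments: dict) -> list:
    """Return a list of problems with a tool call; an empty list means it looks valid."""
    schema = tool["inputSchema"]
    problems = [f"missing: {key}" for key in schema.get("required", []) if key not in arguments]
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected: {key}")
        elif not isinstance(value, _PY_TYPES[spec["type"]]):
            problems.append(f"wrong type: {key}")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"not allowed: {key}={value}")
    return problems


print(validate_arguments(CREATE_TICKET_TOOL, {"summary": "Login fails", "severity": "urgent"}))
```

A tight schema like this gives the model fewer ways to be wrong: a short enum for `severity` is easier to hit reliably than a free-text field.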

But mostly, I learned that I can pick up unfamiliar tech fast when there's a deadline. The skill of breaking down something new and applying it under pressure feels more valuable than any specific tool we used.